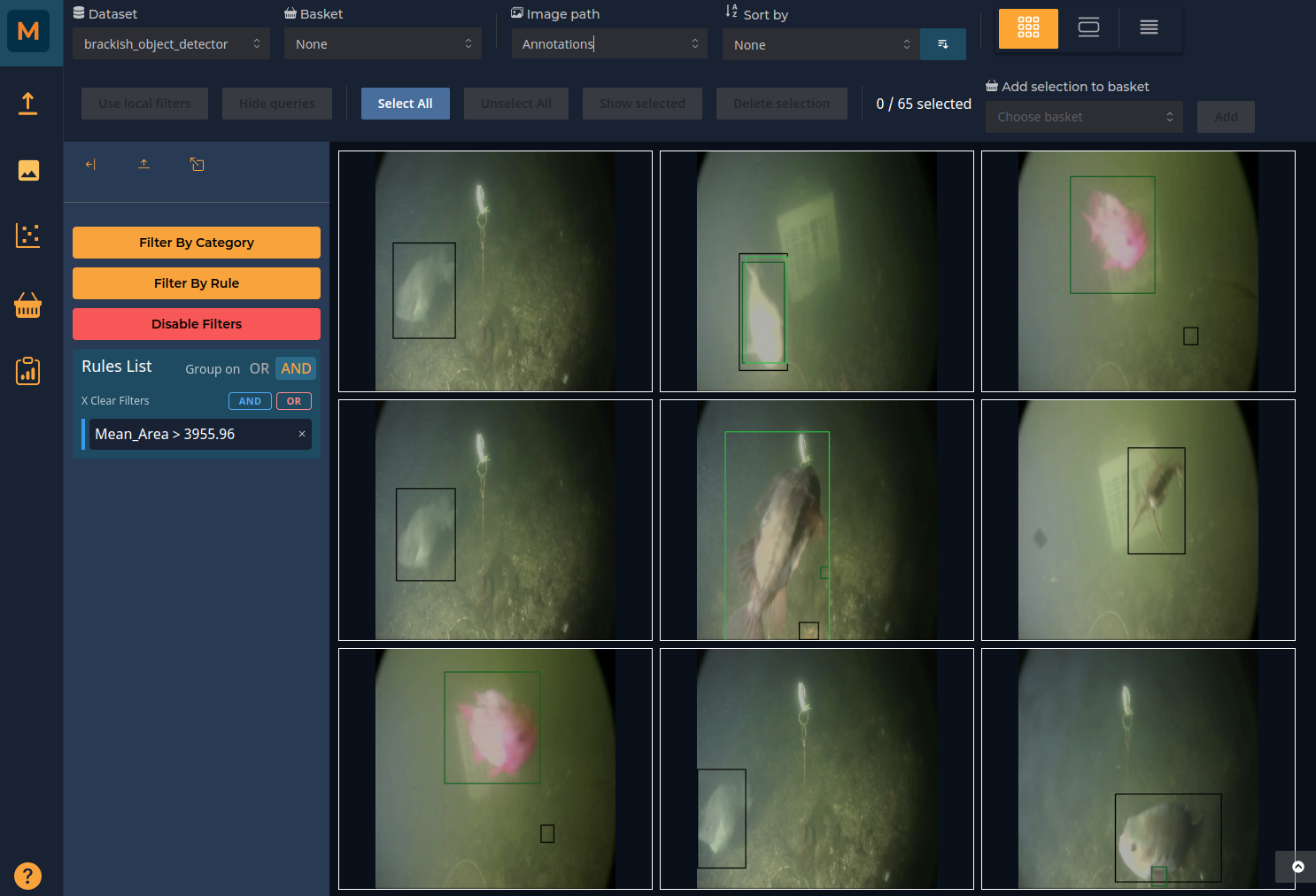

Data Preparation: Object Detection

In this section we describe how to create a CSV file from trained object detection models, which can then be uploaded into Metascatter. We provide scripts for standard object detection model architectures (with user-provided weights) for PyTorch.

You will only need to edit some configuration files to point to your data and model weights.

PyTorch

Downloads

- PyTorch object detection CSV creation script: Download

- Template configuration file: Download

- Template transforms file: Download

- Requirements file: Download

Usage: python create_csv_OD_torch.py 'path_to_config_file.ini'

Quick Start

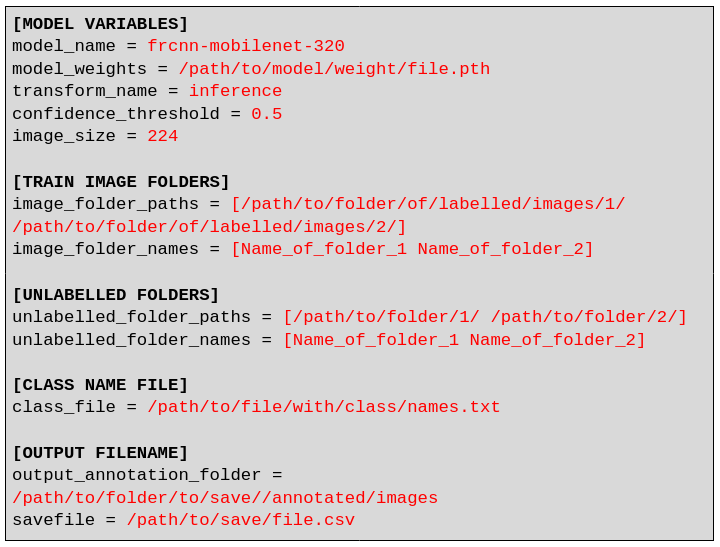

To create a CSV from a PyTorch object detection model, simply edit the variables in red in the template configuration file:

Prepare CSV file

We provide scripts that create a CSV file compatible with Metascatter from your image folders and models.

An example script for PyTorch object detection models can be downloaded here: PyTorch script download. You should not ordinarily need to edit this file.

The following Python 3 libraries are required:

- torch
- torchvision
- pillow>=9.1.0
- pandas>=1.4.2
- scikit-learn (imported as sklearn)
- umap-learn
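Before running the script, it can help to confirm that these libraries are installed. The sketch below uses only the standard library's importlib.metadata; the helper name missing_packages is hypothetical, and it checks only that each package is present, not the minimum versions listed above (the provided requirements file covers those when installed with pip).

```python
from importlib import metadata

# Distribution names as published on PyPI. "sklearn" is installed from the
# "scikit-learn" distribution.
REQUIRED = ["torch", "torchvision", "pillow", "pandas", "scikit-learn", "umap-learn"]


def missing_packages(names):
    """Return the subset of `names` that is not installed in this environment."""
    missing = []
    for name in names:
        try:
            metadata.version(name)  # raises if the distribution is not installed
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    for name in missing_packages(REQUIRED):
        print(f"not installed: {name}")
```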

The following models can be used with either the default weights or your own trained weights:

- "frcnn-resnet": torchvision.models.detection.fasterrcnn_resnet50_fpn
- "frcnn-mobilenet-320": torchvision.models.detection.fasterrcnn_mobilenet_v3_large_320_fpn
- "frcnn-mobilenet": torchvision.models.detection.fasterrcnn_mobilenet_v3_large_fpn
- "retinanet": torchvision.models.detection.retinanet_resnet50_fpn
- "fcos": torchvision.models.detection.fcos_resnet50_fpn
- "ssd-vgg": torchvision.models.detection.ssd300_vgg16
- "ssdlite": torchvision.models.detection.ssdlite320_mobilenet_v3_large
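The list above is effectively a lookup table from the model_name config value to a torchvision constructor. The following is a minimal sketch of such a lookup, not the script's actual code; MODEL_CONSTRUCTORS and get_constructor are hypothetical names, and torchvision is imported lazily so the table can be inspected without it installed.

```python
import importlib

# Hypothetical table mirroring the list above:
# config model_name -> constructor name in torchvision.models.detection.
MODEL_CONSTRUCTORS = {
    "frcnn-resnet": "fasterrcnn_resnet50_fpn",
    "frcnn-mobilenet-320": "fasterrcnn_mobilenet_v3_large_320_fpn",
    "frcnn-mobilenet": "fasterrcnn_mobilenet_v3_large_fpn",
    "retinanet": "retinanet_resnet50_fpn",
    "fcos": "fcos_resnet50_fpn",
    "ssd-vgg": "ssd300_vgg16",
    "ssdlite": "ssdlite320_mobilenet_v3_large",
}


def get_constructor(model_name):
    """Resolve a config model_name to its torchvision constructor function."""
    if model_name not in MODEL_CONSTRUCTORS:
        raise ValueError(
            f"Unknown model_name {model_name!r}; choose from: "
            + ", ".join(MODEL_CONSTRUCTORS)
        )
    # Imported only when a model is actually requested.
    detection = importlib.import_module("torchvision.models.detection")
    return getattr(detection, MODEL_CONSTRUCTORS[model_name])
```

Loading trained weights would then typically mean building the model with the returned constructor and calling load_state_dict on the result of torch.load(model_weights). The keyword arguments these constructors accept (for example num_classes or weights) vary between torchvision versions, so check the documentation for the version you have installed.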

You will need to supply a configuration file and a file describing the transforms used for inference. Templates for both are provided in the Downloads list above.

Usage: python create_csv_OD_torch.py 'path_to_config_file.ini'

The create_csv_OD_torch.py script should not be changed. Edit the configuration file as below.

[MODEL VARIABLES]

model_name = frcnn-mobilenet-320

# Choose from: frcnn-resnet, frcnn-mobilenet-320, frcnn-mobilenet, retinanet, fcos, ssd-vgg, ssdlite

model_weights = /path/to/model/weight/file.pth

transform_name = inference

# Should correspond to transforms_config.py

confidence_threshold = 0.5

image_size = 224

Please use one of the standard model architectures listed above and provide the path to your trained weights. The transform_name field should correspond to the name given in transforms_config.py.
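The configuration file uses INI syntax, so its values can be read with Python's standard configparser module. The sketch below assumes standard INI parsing. It is not taken from create_csv_OD_torch.py, and read_model_variables is a hypothetical helper. It shows how the [MODEL VARIABLES] section maps to typed values.

```python
import configparser

# Example text mirroring the [MODEL VARIABLES] section above.
EXAMPLE = """
[MODEL VARIABLES]
model_name = frcnn-mobilenet-320
# Choose from: frcnn-resnet, frcnn-mobilenet-320, frcnn-mobilenet, retinanet, fcos, ssd-vgg, ssdlite
model_weights = /path/to/model/weight/file.pth
transform_name = inference
# Should correspond to transforms_config.py
confidence_threshold = 0.5
image_size = 224
"""


def read_model_variables(text):
    """Parse the [MODEL VARIABLES] section into typed Python values."""
    parser = configparser.ConfigParser()  # full-line '#' comments are skipped
    parser.read_string(text)
    section = parser["MODEL VARIABLES"]
    return {
        "model_name": section.get("model_name"),
        "model_weights": section.get("model_weights"),
        "transform_name": section.get("transform_name"),
        "confidence_threshold": section.getfloat("confidence_threshold"),
        "image_size": section.getint("image_size"),
    }
```

To read a file on disk instead, pass its contents, e.g. read_model_variables(open("path_to_config_file.ini").read()).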

[TRAIN IMAGE FOLDERS]

# paths to labelled images and annotations

image_folder_paths = [/path/to/folder/of/labelled/images/1/ /path/to/folder/of/labelled/images/2/ /path/to/folder/of/labelled/images/3/]

image_folder_names = [Name_of_source_of_folder_1 Name_of_source_of_folder_2 Name_of_source_of_folder_3]

# e.g. train and val folders. For multiple locations, separate folders and source names with a space.

Include a list of folders that store the labelled images you want to use. Each folder should be structured in the following format:

You can provide several folders, e.g. if you have different folders for TRAINING and VALIDATION images. You can reference these by entering a corresponding name in the field image_folder_names. Please ensure there are the same number of source names as folders provided. The folders and names should be separated by a space.
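The bracketed, space-separated values used for image_folder_paths and image_folder_names can be split and paired as follows. This minimal sketch is an assumption about how such values might be handled, not the script's own parser. parse_folder_list and pair_folders_with_names are hypothetical names. Because items are split on whitespace, it also assumes that no folder path contains a space.

```python
def parse_folder_list(value):
    """Split a bracketed, space-separated value such as "[a/ b/]" into items.

    A blank value (e.g. no unlabelled folders) yields an empty list.
    """
    value = value.strip()
    if value.startswith("[") and value.endswith("]"):
        value = value[1:-1]
    return value.split()


def pair_folders_with_names(paths_value, names_value):
    """Pair each folder with its source name, checking that the counts match."""
    paths = parse_folder_list(paths_value)
    names = parse_folder_list(names_value)
    if len(paths) != len(names):
        raise ValueError(
            f"{len(paths)} folders but {len(names)} source names were given"
        )
    return list(zip(paths, names))
```

For example, pair_folders_with_names("[/data/train/ /data/val/]", "[train val]") returns [("/data/train/", "train"), ("/data/val/", "val")].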

[UNLABELLED FOLDERS]

unlabelled_folder_paths = [/path/to/folder/of/unlabelled/images/1/ /path/to/folder/of/unlabelled/images/2/]

unlabelled_folder_names = [Name_of_source_of_folder_1 Name_of_source_of_folder_2]

# Unordered images: Folder->Image. For multiple locations, please separate folders and sources by a space.

Similarly, you can include one or many folders for unlabelled test data. Leave blank if there are no such folders.
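Unlabelled folders use a flat Folder->Image layout, so collecting their images amounts to listing the files in each folder. The sketch below is illustrative only. The set of accepted image extensions and the helper name list_unlabelled_images are assumptions, not taken from the script.

```python
from pathlib import Path

# Assumed set of image extensions; adjust to match your data.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp"}


def list_unlabelled_images(folder_paths, folder_names):
    """Return (source_name, image_path) pairs for a flat Folder->Image layout.

    Only files directly inside each folder are considered; subfolders are
    ignored. An empty folder list (no unlabelled data) returns an empty list.
    """
    images = []
    for folder, name in zip(folder_paths, folder_names):
        for path in sorted(Path(folder).iterdir()):
            if path.is_file() and path.suffix.lower() in IMAGE_EXTENSIONS:
                images.append((name, path))
    return images
```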

In order to output class names (instead of numbers) to the CSV, you will need to provide a class list file.

[CLASS NAME FILE]

class_file = /path/to/file/with/class/names.txt

List the class names one per line, in the order corresponding to the model's output indices:

Class0

Class1

Class2

...

ClassN
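Because line order defines each class's index, the file can be turned into an index-to-name mapping. This minimal sketch assumes that blank lines carry no meaning and can be skipped. read_class_names and label_to_name are hypothetical helpers. The fallback to the raw number for an unknown label is a design choice made here, not documented behaviour of the script.

```python
def read_class_names(text):
    """Map model output indices to class names, one name per non-empty line.

    The first name is class 0, the next class 1, and so on.
    """
    names = [line.strip() for line in text.splitlines() if line.strip()]
    return dict(enumerate(names))


def label_to_name(label, class_names):
    """Return the class name for a numeric label, or the number as a string."""
    return class_names.get(int(label), str(label))
```

With the file on disk, read_class_names(open(class_file).read()) produces the mapping.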

Finally, enter the folder in which to store the images with bounding boxes drawn on them, and the path and filename of the output CSV file:

[OUTPUT FILENAME]

output_annotation_folder = /path/to/folder/of/images/with/bounding_boxes

savefile = /path/to/save/file.csv
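The page does not state exactly which detections are written to the CSV or drawn on the annotated images. One common approach, assumed here, is to keep only detections whose score meets confidence_threshold. torchvision detection models in eval mode return, per image, a dict with "boxes", "labels" and "scores". The sketch below takes those as plain Python sequences. filter_detections is a hypothetical name, and the inclusive >= comparison is an assumption.

```python
def filter_detections(boxes, labels, scores, confidence_threshold):
    """Keep detections whose score is at least confidence_threshold.

    boxes are (x1, y1, x2, y2) sequences; labels and scores are aligned with them.
    """
    kept = []
    for box, label, score in zip(boxes, labels, scores):
        if score >= confidence_threshold:
            kept.append({"box": list(box), "label": label, "score": score})
    return kept
```

With real model output, the tensors can be converted first, e.g. output["scores"].tolist().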

This script works with the standard architectures named above, using either default or trained weights. For bespoke architectures, please see Data Preparation.