Data Preparation: Other

The input to Metascatter is a CSV file containing:

- Image paths

- 2D co-ordinate representations of the images (obtained using dimensionality reduction on raw images or model features)

- Associated metadata

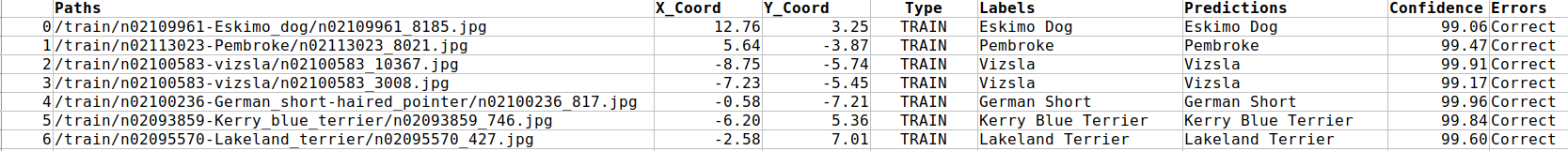

For instance, below is an example CSV for the dog breed classification example:

In this case, the Paths column contains the relative paths to the images (you will be able to specify the path prefix when loading the CSV). You could also use full paths to accessible online storage. X-Coord and Y-Coord provide the co-ordinates for the scatterplot. These three fields are the minimum needed for Metascatter to run.

Other columns of the CSV can contain any other type of metadata. For instance, this could be data output from the model, e.g. predictions and confidence of predictions, or image information such as ground truth labels, acquisition method, associated demographic data and so forth. The more metadata, the more analyses you can do in Metascatter.
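As a rough illustration of the layout described above, the sketch below writes a small CSV with Python's standard `csv` module. The Paths, X-Coord and Y-Coord headers follow the example in the text. The Label, Prediction and Confidence columns, and all row values, are made-up placeholders. Whether Metascatter expects these exact header spellings is an assumption here.

```python
import csv
import io

# Hypothetical rows: paths, co-ordinates and metadata values are invented for illustration.
rows = [
    {"Paths": "images/beagle_001.jpg", "X-Coord": 12.4, "Y-Coord": -3.1,
     "Label": "beagle", "Prediction": "beagle", "Confidence": 0.97},
    {"Paths": "images/pug_014.jpg", "X-Coord": -8.9, "Y-Coord": 5.6,
     "Label": "pug", "Prediction": "pug", "Confidence": 0.88},
    {"Paths": "images/husky_203.jpg", "X-Coord": 1.2, "Y-Coord": 9.3,
     "Label": "husky", "Prediction": "malamute", "Confidence": 0.54},
]

# The first three columns are the minimum; the rest are optional metadata.
fieldnames = ["Paths", "X-Coord", "Y-Coord", "Label", "Prediction", "Confidence"]

# Written to an in-memory buffer here; to write a file instead, use
# open("metascatter_input.csv", "w", newline="") in place of io.StringIO().
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=fieldnames)
writer.writeheader()
writer.writerows(rows)

csv_text = buffer.getvalue()
print(csv_text)
```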

For image classification tasks, you can produce an example CSV file using the scripts provided in Image Classification.

Creating the Image Co-ordinates

In this section we discuss how to create the 2D co-ordinate representation for each image. This involves two steps: feature extraction and dimensionality reduction.

The co-ordinates can be obtained by either using image intensities or features extracted by passing the images through a model as in Feature Extraction. After extracting either the intensity or model features, these will have to be reduced to two-dimensional co-ordinates as described in Dimensionality Reduction.

Feature extraction

Machine learning methods typically work by transforming the input data to a reduced number of features on which the task (e.g. classification, object detection, segmentation) can work more successfully. For instance, for a classifier that distinguishes between images of zebras and horses, features representing a stripy pattern would work better than features representing legs and tails.

In deep learning, these features are optimised in the training process (rather than being pre-defined as in conventional machine learning techniques). Metascatter allows you to evaluate the performance of a deep learning model by visualising how the model 'sees' the images in the dataset in terms of these features. For a well-performing model, you would expect a clear boundary between the features of horses and the features of zebras.

Different layers of a trained deep learning model will produce different features. You can obtain these features by running each image through the model up to the relevant layer.

For example, for classification models such as VGG16, we can pass the images through a feature model up to the layer before the final classification:

(classifier): Sequential(

(0): Linear(in_features=25088, out_features=4096, bias=True)

(1): ReLU(inplace=True)

(2): Dropout(p=0.5, inplace=False)

(3): Linear(in_features=4096, out_features=4096, bias=True)

================================================================

# (4): ReLU(inplace=True)

# (5): Dropout(p=0.5, inplace=False)

# (6): Linear(in_features=4096, out_features=1000, bias=True)

)
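The truncation shown above can be sketched in PyTorch roughly as follows. This is a minimal sketch, not the script referenced in this guide: `truncate_classifier` is a hypothetical helper, and `head` is a small stand-in with the same layer layout as the VGG16 classifier but with made-up layer sizes so that it runs quickly.

```python
import torch
import torch.nn as nn


def truncate_classifier(classifier: nn.Sequential, last_index: int) -> nn.Sequential:
    """Keep layers 0..last_index of a Sequential head and drop the rest."""
    return nn.Sequential(*list(classifier.children())[: last_index + 1])


# Stand-in head: same layout as the VGG16 classifier, with much smaller (made-up) sizes.
head = nn.Sequential(
    nn.Linear(32, 16),      # (0)
    nn.ReLU(inplace=True),  # (1)
    nn.Dropout(p=0.5),      # (2)
    nn.Linear(16, 16),      # (3) last layer kept
    nn.ReLU(inplace=True),  # (4) dropped
    nn.Dropout(p=0.5),      # (5) dropped
    nn.Linear(16, 10),      # (6) dropped (final classification layer)
)

feature_head = truncate_classifier(head, last_index=3)
feature_head.eval()  # switch off dropout for inference

with torch.no_grad():
    # Two fake inputs standing in for the flattened convolutional features.
    features = feature_head(torch.randn(2, 32))

print(features.shape)
```

For a torchvision VGG16, the same idea would be to assign `model.classifier = truncate_classifier(model.classifier, 3)`, after which calling the model on a batch of images returns 4096 values per image.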

Some scripts for doing this with common example models are given below:

Classification

Multi-class classification (120 classes) using PyTorch MobileNet. We recommend using the last layer before the classification head. [Download script here]

Segmentation (Keras)

U-Net for pixel-level segmentation of images of small animals. Download: [Script] [Model] [Data]

Tip

For a deep learning segmentation model, we typically choose the output features after the final encoding layer (before any upsampling).

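In Keras, you can output the features by creating a sub-model up to the required named layer:

import numpy as np

from tensorflow.keras.models import Model  # or: from keras.models import Model

# unet() is your own function that builds the model architecture

model = unet()

model.load_weights('my_trained_model.h5')

# Replace 'conv2d_transpose_1' with the name of the required layer in your model (see model.summary())

submodel = Model(model.inputs, model.get_layer('conv2d_transpose_1').output)

features = submodel.predict(np.expand_dims(img, 0))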

Object Detection (PyTorch)

Object detection using Ultralytics YOLOv5 small [Download script here] [Example CSV here]

No model

If you do not have a trained model, you can use the raw image intensities (optionally after some pre-processing) as features instead.

features = img.flatten()
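As a sketch of how this might look for a whole dataset, the example below rescales each image to the range [0, 1] as a simple pre-processing step, flattens it, and stacks the results into one matrix with a row per image. The helper name `intensity_features` and the random images are illustrative assumptions. In practice the images would be loaded from disk and would all need the same size so that every feature vector has the same length.

```python
import numpy as np


def intensity_features(img: np.ndarray) -> np.ndarray:
    """Rescale pixel intensities to [0, 1] and flatten to a 1D vector."""
    img = np.asarray(img, dtype=np.float32)
    span = img.max() - img.min()
    if span > 0:
        img = (img - img.min()) / span
    else:
        # Constant image: no contrast to rescale, so use all zeros.
        img = np.zeros_like(img)
    return img.flatten()


# Synthetic stand-ins for five loaded RGB images of identical size.
rng = np.random.default_rng(0)
images = [rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8) for _ in range(5)]

# One row per image, one column per pixel value.
features = np.stack([intensity_features(img) for img in images])
print(features.shape)  # (5, 3072)
```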

Dimensionality reduction

The resulting data (features or image intensities) might still contain several thousand values for each image. For instance, in the above VGG16 classification model, 4096 features are extracted from layer (3). To visualise these on a 2D plot, we need to reduce the dimensionality while preserving the structure of relationships between data points.

We therefore apply dimensionality reduction to these features. We use t-SNE to obtain a 2D representation of the data that compactly describes the underlying structure. In Python, you can use the scikit-learn library.

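from sklearn.manifold import TSNE

# features: array of shape (n_images, n_features); perplexity must be smaller than n_images

# Note: scikit-learn 1.5 and later name the n_iter argument max_iter

tsne = TSNE(n_components=2, verbose=1, perplexity=50, n_iter=500)

X_coords = tsne.fit_transform(features)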
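Two practical details are easy to miss when running t-SNE: the input has to be a 2D array with one row per image, and the perplexity has to be smaller than the number of images. The sketch below illustrates both with synthetic feature maps standing in for real model outputs. The array sizes and the `random_state` value are arbitrary choices for this example.

```python
import numpy as np
from sklearn.manifold import TSNE

rng = np.random.default_rng(0)

# Synthetic stand-in: 120 images, each with an 8x8x4 feature map
# (e.g. the output of a convolutional layer).
feature_maps = rng.normal(size=(120, 8, 8, 4)).astype(np.float32)

# t-SNE expects shape (n_samples, n_features), so flatten each feature map.
features = feature_maps.reshape(len(feature_maps), -1)

# scikit-learn requires perplexity < n_samples, so cap it for small datasets.
perplexity = min(50, len(features) - 1)

tsne = TSNE(n_components=2, perplexity=perplexity, random_state=0)
coords = tsne.fit_transform(features)

# The two columns can be used as the X-Coord and Y-Coord values in the CSV.
x_coord, y_coord = coords[:, 0], coords[:, 1]
print(coords.shape)  # (120, 2)
```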